2024

hi

Rokhmanova, N., Martus, J., Faulkner, R., Fiene, J., Kuchenbecker, K. J.

GaitGuide: A Wearable Device for Vibrotactile Motion Guidance

Workshop paper (3 pages) presented at the ICRA Workshop on Advancing Wearable Devices and Applications Through Novel Design, Sensing, Actuation, and AI, Yokohama, Japan, May 2024 (misc) Accepted

hi

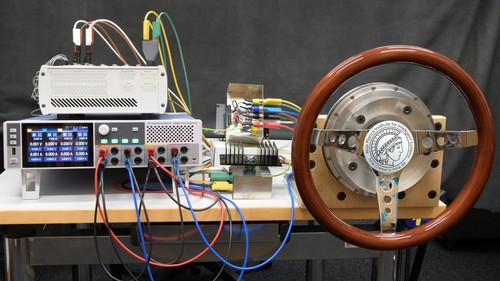

Javot, B., Nguyen, V. H., Ballardini, G., Kuchenbecker, K. J.

CAPT Motor: A Strong Direct-Drive Rotary Haptic Interface

Hands-on demonstration presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

hi

rm

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Multimodal Haptic Feedback

Extended abstract (1 page) presented at the IEEE RoboSoft Workshop on Multimodal Soft Robots for Multifunctional Manipulation, Locomotion, and Human-Machine Interaction, San Diego, USA, April 2024 (misc)

hi

Fazlollahi, F., Seifi, H., Ballardini, G., Taghizadeh, Z., Schulz, A., MacLean, K. E., Kuchenbecker, K. J.

Quantifying Haptic Quality: External Measurements Match Expert Assessments of Stiffness Rendering Across Devices

Work-in-progress paper (2 pages) presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

hi

rm

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic Wearable Devices for Expressive and Salient Haptic Feedback

Hands-on demonstration presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

hi

Serhat, G., Kuchenbecker, K. J.

Fingertip Dynamic Response Simulated Across Excitation Points and Frequencies

Biomechanics and Modeling in Mechanobiology, April 2024 (article) Accepted

hi

rm

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Demonstration: Cutaneous Electrohydraulic (CUTE) Wearable Devices for Expressive and Salient Haptic Feedback

Hands-on demonstration presented at the IEEE RoboSoft Conference, San Diego, USA, April 2024 (misc)

hi

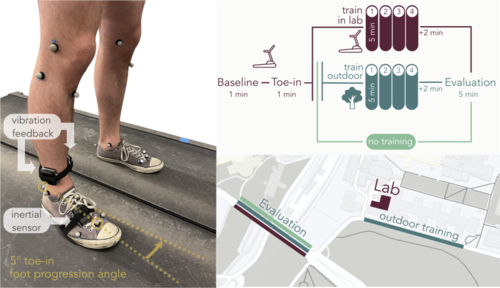

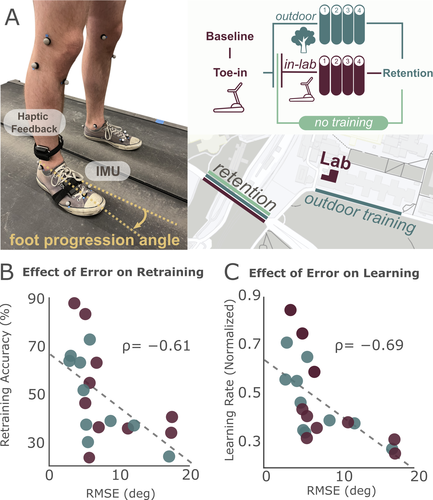

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

IMU-Based Kinematics Estimation Accuracy Affects Gait Retraining Using Vibrotactile Cues

IEEE Transactions on Neural Systems and Rehabilitation Engineering, 32, pages: 1005-1012, February 2024 (article)

hi

Schulz, A., Serhat, G., Kuchenbecker, K. J.

Adapting a High-Fidelity Simulation of Human Skin for Comparative Touch Sensing in the Elephant Trunk

Abstract presented at the Society for Integrative and Comparative Biology Annual Meeting (SICB), Seattle, USA, January 2024 (misc)

hi

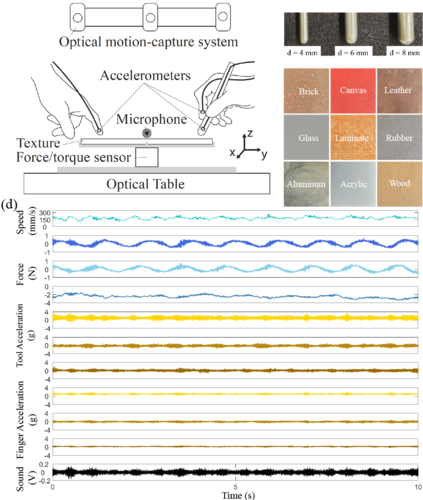

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

MPI-10: Haptic-Auditory Measurements from Tool-Surface Interactions

Dataset published as a companion to the journal article "Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise" in IEEE Transactions on Haptics, January 2024 (misc)

hi

Fitter, N. T., Mohan, M., Preston, R. C., Johnson, M. J., Kuchenbecker, K. J.

How Should Robots Exercise with People? Robot-Mediated Exergames Win with Music, Social Analogues, and Gameplay Clarity

Frontiers in Robotics and AI, 10(1155837):1-18, January 2024 (article)

zwe-csfm

hi

Schulz, A., Kaufmann, L., Brecht, M., Richter, G., Kuchenbecker, K. J.

Whiskers That Don’t Whisk: Unique Structure From the Absence of Actuation in Elephant Whiskers

Abstract presented at the Society for Integrative and Comparative Biology Annual Meeting (SICB), Seattle, USA, January 2024 (misc)

hi

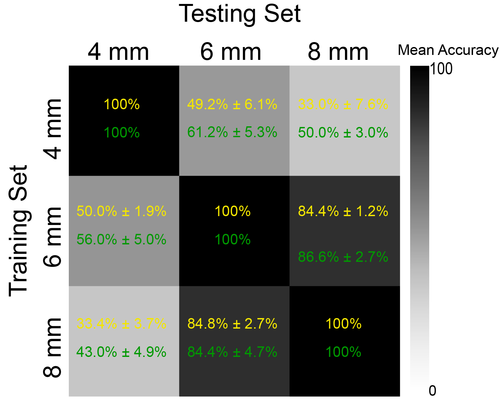

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise

IEEE Transactions on Haptics, 17(1):58-65, January 2024, Presented at the IEEE Haptics Symposium (article)

zwe-csfm

Gorke, O., Stuhlmüller, M., Tovar, G. E. M., Southan, A.

Unravelling parameter interactions in calcium alginate/polyacrylamide double network hydrogels using a design of experiments approach for the optimization of mechanical properties

Materials Advances, 5, pages: 2851-2859, Royal Society of Chemistry, 2024 (article)

ps

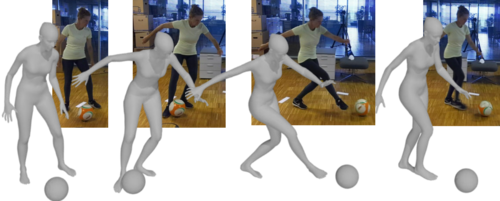

Huang, Y., Taheri, O., Black, M. J., Tzionas, D.

InterCap: Joint Markerless 3D Tracking of Humans and Objects in Interaction from Multi-view RGB-D Images

International Journal of Computer Vision (IJCV), 2024 (article)

al

Simon, A., Weimar, J., Martius, G., Oettel, M.

Machine learning of a density functional for anisotropic patchy particles

Journal of Chemical Theory and Computation, 2024 (article)

zwe-csfm

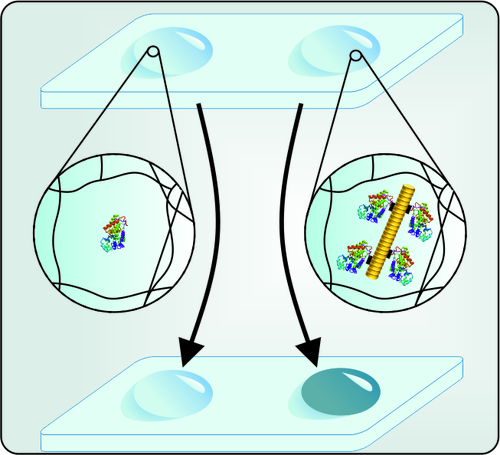

Grübel, J., Wendlandt, T., Urban, D., Jauch, C. O., Wege, C., Tovar, G. E. M., Southan, A.

Soft Sub-Structured Multi-Material Biosensor Hydrogels with Enzymes Retained by Plant Viral Scaffolds

Macromolecular Bioscience, 24(3):2300311, Wiley, 2024 (article)

ev

Xue, Y., Li, H., Leutenegger, S., Stueckler, J.

Event-based Non-Rigid Reconstruction of Low-Rank Parametrized Deformations from Contours

International Journal of Computer Vision (IJCV), 2024 (article)

ei

Tsirtsis, S., Tabibian, B., Khajehnejad, M., Singla, A., Schölkopf, B., Gomez-Rodriguez, M.

Optimal Decision Making Under Strategic Behavior

Management Science, 2024, Published Online (article) In press

ps

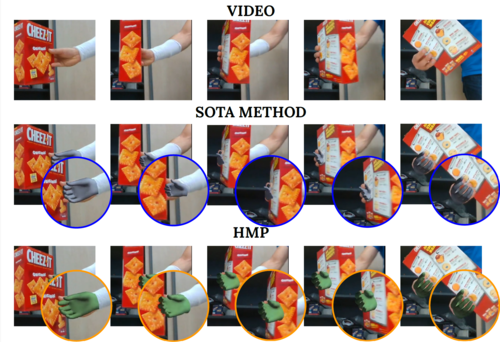

Duran, E., Kocabas, M., Choutas, V., Fan, Z., Black, M. J.

HMP: Hand Motion Priors for Pose and Shape Estimation from Video

Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2024 (article)

hi

Landin, N., Romano, J. M., McMahan, W., Kuchenbecker, K. J.

Discrete Fourier Transform Three-to-One (DFT321): Code

MATLAB code for Discrete Fourier Transform Three-to-One (DFT321), 2024 (misc)

2023

pio

Ruggeri, N., Lonardi, A., De Bacco, C.

Message-Passing on Hypergraphs: Detectability, Phase Transitions and Higher-Order Information

December 2023 (article)

ps

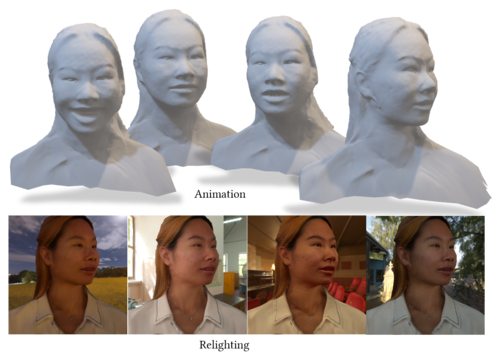

Bharadwaj, S., Zheng, Y., Hilliges, O., Black, M. J., Abrevaya, V. F.

FLARE: Fast Learning of Animatable and Relightable Mesh Avatars

ACM Transactions on Graphics, 42(6):204:1-204:15, December 2023 (article) Accepted

ps

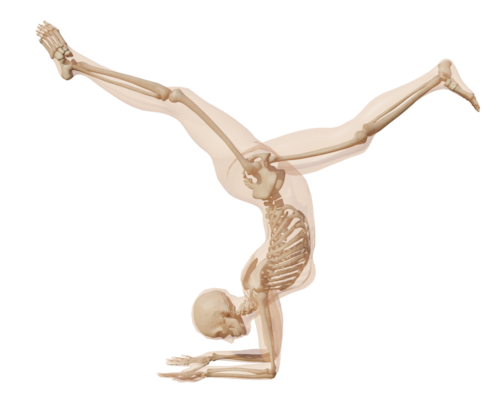

Keller, M., Werling, K., Shin, S., Delp, S., Pujades, S., Liu, C. K., Black, M. J.

From Skin to Skeleton: Towards Biomechanically Accurate 3D Digital Humans

ACM Transactions on Graphics (TOG), 42(6):253:1-253:15, December 2023 (article)

hi

L’Orsa, R., Lama, S., Westwick, D., Sutherland, G., Kuchenbecker, K. J.

Towards Semi-Automated Pleural Cavity Access for Pneumothorax in Austere Environments

Acta Astronautica, 212, pages: 48-53, November 2023 (article)

pio

Badalyan, A., Ruggeri, N., De Bacco, C.

Hypergraphs with node attributes: structure and inference

November 2023 (article) Submitted

rm

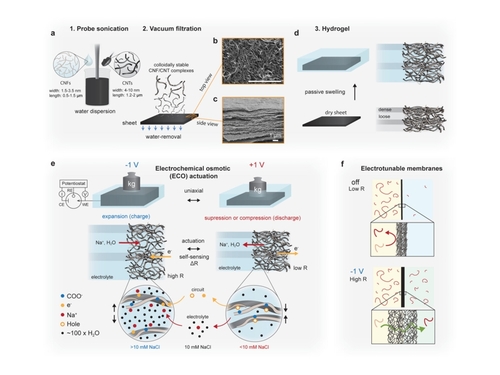

Benselfelt, T., Shakya, J., Rothemund, P., Lindström, S. B., Piper, A., Winkler, T. E., Hajian, A., Wågberg, L., Keplinger, C., Hamedi, M. M.

Electrochemically Controlled Hydrogels with Electrotunable Permeability and Uniaxial Actuation

Advanced Materials, 35(45):2303255, Wiley-VCH GmbH, November 2023 (article)

hi

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Seeking Causal, Invariant, Structures with Kernel Mean Embeddings in Haptic-Auditory Data from Tool-Surface Interaction

Workshop paper (4 pages) presented at the IROS Workshop on Causality for Robotics: Answering the Question of Why, Detroit, USA, October 2023 (misc)

ei

Hupkes, D., Giulianelli, M., Dankers, V., Artetxe, M., Elazar, Y., Pimentel, T., Christodoulopoulos, C., Lasri, K., Saphra, N., Sinclair, A., Ulmer, D., Schottmann, F., Batsuren, K., Sun, K., Sinha, K., Khalatbari, L., Ryskina, M., Frieske, R., Cotterell, R., Jin, Z.

A taxonomy and review of generalization research in NLP

Nature Machine Intelligence, 5(10):1161-1174, October 2023 (article)

hi

Allemang-Trivalle, A.

Enhancing Surgical Team Collaboration and Situation Awareness through Multimodal Sensing

Proceedings of the ACM International Conference on Multimodal Interaction (ICMI), pages: 716-720, Extended abstract (5 pages) presented at the ACM International Conference on Multimodal Interaction (ICMI) Doctoral Consortium, Paris, France, October 2023 (misc)

pio

Ibrahim, A. A., Muehlebach, M., De Bacco, C.

Optimal transport with constraints: from mirror descent to classical mechanics

September 2023 (article) Submitted

hi

Garrofé, G., Schoeffmann, C., Zangl, H., Kuchenbecker, K. J., Lee, H.

NearContact: Accurate Human Detection using Tomographic Proximity and Contact Sensing with Cross-Modal Attention

Extended abstract (4 pages) presented at the International Workshop on Human-Friendly Robotics (HFR), Munich, Germany, September 2023 (misc)

hi

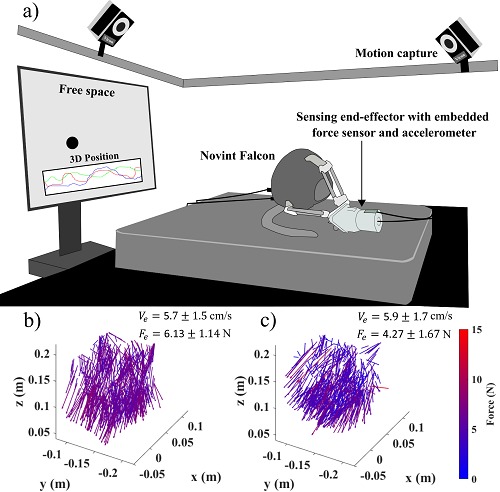

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test

IEEE Transactions on Automation Science and Engineering, pages: 1-16, August 2023 (article)

ei

Hawkins-Hooker, A., Visonà, G., Narendra, T., Rojas-Carulla, M., Schölkopf, B., Schweikert, G.

Getting personal with epigenetics: towards individual-specific epigenomic imputation with machine learning

Nature Communications, 14(1), August 2023 (article)

hi

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

The Role of Kinematics Estimation Accuracy in Learning with Wearable Haptics

Abstract presented at the American Society of Biomechanics (ASB), Knoxville, USA, August 2023 (misc)

pio

Della Vecchia, A., Neocosmos, K., Larremore, D. B., Moore, C., De Bacco, C.

A model for efficient dynamical ranking in networks

August 2023 (article) Submitted

al

hi

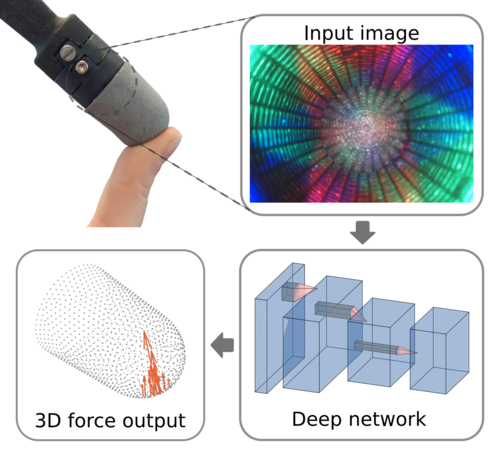

Andrussow, I., Sun, H., Kuchenbecker, K. J., Martius, G.

Minsight: A Fingertip-Sized Vision-Based Tactile Sensor for Robotic Manipulation

Advanced Intelligent Systems, 5(8):2300042, August 2023, Inside back cover (article)

hi

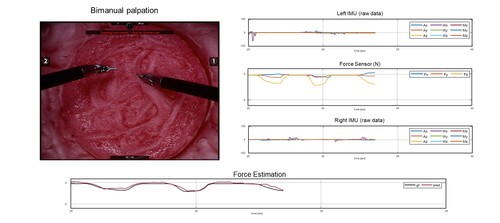

Lee, Y., Husin, H. M., Forte, M., Lee, S., Kuchenbecker, K. J.

Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data

IEEE Transactions on Medical Robotics and Bionics, 5(3):496-506, August 2023 (article)

ps

Rueegg, N., Zuffi, S., Schindler, K., Black, M. J.

BARC: Breed-Augmented Regression Using Classification for 3D Dog Reconstruction from Images

International Journal of Computer Vision (IJCV), 131(8):1964-1979, August 2023 (article)

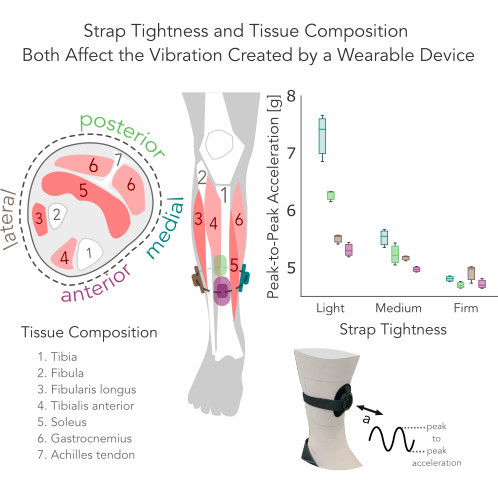

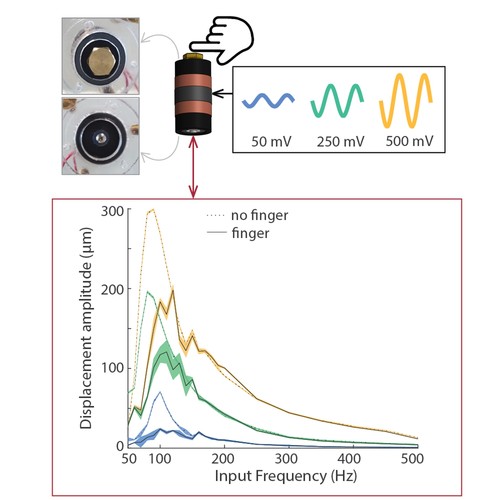

hi

Rokhmanova, N., Faulkner, R., Martus, J., Fiene, J., Kuchenbecker, K. J.

Strap Tightness and Tissue Composition Both Affect the Vibration Created by a Wearable Device

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

hi

Ballardini, G., Kuchenbecker, K. J.

Toward a Device for Reliable Evaluation of Vibrotactile Perception

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

hi

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test: Code

Code published as a companion to the journal article "Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test" in IEEE Transactions on Automation Science and Engineering, July 2023 (misc)

hi

Fazlollahi, F., Taghizadeh, Z., Kuchenbecker, K. J.

Improving Haptic Rendering Quality by Measuring and Compensating for Undesired Forces

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

hi

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Capturing Rich Auditory-Haptic Contact Data for Surface Recognition

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

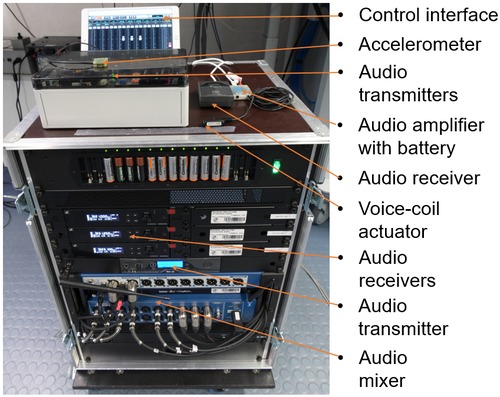

hi

Gong, Y., Javot, B., Lauer, A. P. R., Sawodny, O., Kuchenbecker, K. J.

AiroTouch: Naturalistic Vibrotactile Feedback for Telerobotic Construction

Hands-on demonstration presented at the IEEE World Haptics Conference, Delft, The Netherlands, July 2023 (misc)

ei

Ortiz-Jimenez*, G., de Jorge*, P., Sanyal, A., Bibi, A., Dokania, P. K., Frossard, P., Rogez, G., Torr, P.

Catastrophic overfitting can be induced with discriminative non-robust features

Transactions on Machine Learning Research, July 2023, *equal contribution (article)

pio

Lonardi, A., De Bacco, C.

Bilevel Optimization for Traffic Mitigation in Optimal Transport Networks

Physical Review Letters, July 2023 (article) Accepted

rm

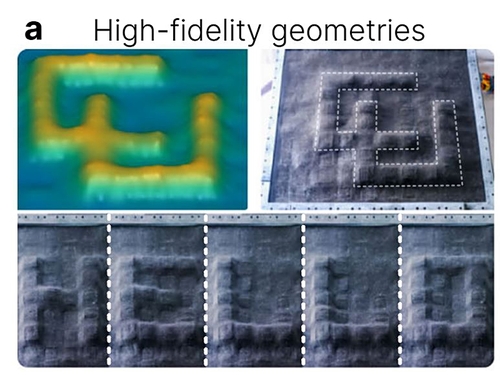

Johnson, B. K., Naris, M., Sundaram, V., Volchko, A., Ly, K., Mitchell, S. K., Acome, E., Kellaris, N., Keplinger, C., Correll, N., Humbert, J. S., Rentschler, M. E.

A Multifunctional Soft Robotic Shape Display with High-speed Actuation, Sensing, and Control

Nature Communications, 14(1), July 2023 (article)